There are a lot of reasons to mistrust smart artefacts and services. In 2017, reports emerged almost every week about hacks (“Equifax hit by data breach, affecting more than 143 million Americans”, 2017), ransomware (“Windows WannaCry: This separate, ‘bigger’ malware attack also uses NSA’s exploit”, 2017), attacks (“Uber concealed hack of 57 million accounts for more than a year”, 2017), data leaks (“Incident report on memory leak caused by Cloudflare parser bug”, 2017), unethical behaviour by technology companies (“Uber’s latest misstep? The ‘Greyball’ program”, 2017) and the hijacking of social media to shift public opinion (“The Data That Turned the World Upside Down”, 2017). This, however, has not stopped us from using smart artefacts and services. On the contrary: it seems we have somehow built a strong relationship with the smart artefacts and services we use, and although the trust we put into this relationship has been put to the test, we are not giving up on them yet. Why do we trust these devices and services? What exactly is trust? And can it be designed?

Using little diaries in the form of smartphones, I tried to learn more about the trust we put into smart artefacts and services, even if we know we should not.

To understand why many of us willingly use smart products and services while we know, or could know, that our personal data is far from safe, I conducted an experiment. Over a few months in 2016, a group of participants was asked to keep a journal of their smartphone use by means of a cultural probe. The ultimate goal was to have a conversation with the participants and to reflect on their personal account of the experiment.

The main reasons for choosing a cultural probe as a research tool were as follows. First, I wanted to create a mutual starting point for the experiment. As a physical rather than a digital artefact, the probe and the questions inside were intended as a conversation piece that would give interviewer and interviewee a shared mental model of the subject. It is important to stress that the goal was not to obtain objective, quantitative data, but subjective, qualitative data.

The main goal was to establish a conversation with the participants, based on a mutual starting point: the probe itself. Bill Gaver, one of the pioneers in the use of cultural probes, confirms that using probes during a project with elderly people in different European communities helped in establishing such conversations (Gaver, 1999: 29; see Gaver B, Pacenti E, Dunne T, “Cultural Probes”, Interactions, 1999).

Second, the probe was meant to give participants enough time to become fully aware of their individual choices and behaviour, so that they could reflect on them during the interview. As the probe would be in the participant’s possession for a few weeks, the participant could grow familiar with the object and with the frequency and structure of the questions and assignments inside.

Third and last, the form factor of the artefact was intentionally chosen to arouse enough curiosity for people to participate in the experiment. I hoped that this curiosity about the artefact would create a wish to be involved, and that the probe would hold participants’ attention longer than a completely digital questionnaire would.

I sought to learn how much personal, intimate data people would be prepared to share via an analogue diary that would be returned to me, instead of via their own phone. I wanted to see whether their perception would differ, and whether there would be a discrepancy between these two scenarios.

The journal itself was a cultural probe in the form of a padlocked smartphone. Only the author and the participant had a key to the padlock, to give the participant a sense of security about the data they submitted to the probe. The interface resembled a digital screen interface, but it was printed on paper, and data had to be submitted with a pen or pencil: the form factor was digital, but the interaction was meant to be fully analogue.

Research Probes ready for deployment

The main goal was to find out how much intimate, smartphone-related data people were willing to surrender to an analogue paper diary. I chose a paper diary because writing in a physical diary, with pen and paper, requires a different mindset than filling out a digital questionnaire. The context differs from the digital environment, so participants had to make a mental translation between the data they submit through their smartphone and what they write in the analogue diary. I aimed to create a conscious mindset, because the participants needed to be fully aware that they were ‘submitting data’.

The questions and assignments in the probe were diverse, and as the experiment progressed, they became more invasive and intimate. The participants were asked to provide as many answers and as much data as possible, but if they felt a question or assignment came too close for comfort, they could rip out the page containing it. The ripped-out pages provided data as well, because these pages apparently marked the participant’s ‘red line’, i.e. the point at which the participant became reluctant to share personal data.

Below is a gallery with all the different pages with questions (in Dutch).

It is important to note that I was well acquainted with most of the participants. This intervention was about finding out whether the participants could be made aware of a possible discrepancy between data subconsciously shared with large, faceless tech companies and the same data consciously shared with someone they actually know. A total of 30 participants were asked to fill out the probe, and fifteen of them were interviewed. Some interviewees had internet-related professions; some were designers. Ages ranged from 21 to 48 years, and the interviewees were roughly half male, half female. Three participants chose to return the probe by mail. One of them refused to cooperate, as he found the questions ‘too intrusive’.

I interviewed 15 people, and a few of the interviews were edited into short films. People seem to have a very personal relationship with their mobile phones. Most were somewhat aware of privacy risks, but took them for granted. They trusted these devices and services, although most of them knew they should not.

The interviews (20 to 30 minutes each) were recorded and edited down to 5 to 10 minutes of the most interesting exchanges. The participants seemed to have a vague awareness of the risks they ran (4) while using their smartphones and other smart artefacts and services, but everyone seemed more or less fine with that. It was clear that as long as they were not confronted too bluntly with the fact that they had shared personal data, it did not seem to bother them much (1)(2)(4).

Most had experienced some form of online profiling, or of data ending up in places they did not expect (1)(2)(4). There was awareness of contextual integrity as well, but it is interesting to see that there is still a sense of control, because the phone is considered ‘personal’ (1). They explained their reasoning as a straightforward trade-off: in exchange for using apps and devices for free or at a discount, it felt somewhat normal to submit personal data (1)(5).

Cultural Probe: Smartphone research – Marieke from Intimate Data on Vimeo.

Cultural Probe: Smartphone research – Amarins from Intimate Data on Vimeo.

Cultural Probe: Smartphone research – Mark & Stefan from Intimate Data on Vimeo.

Cultural Probe: Smartphone research – Douwe & Marije from Intimate Data on Vimeo.

Cultural Probe: Smartphone research – Zsa Zsa from Intimate Data on Vimeo.

When it came to privacy awareness, everyone was convinced that personal privacy was important, though, with an exception, most had never read the terms & conditions or privacy statements of app and device manufacturers (1)(3). The participants feel that the device is part of their own intimate world and that they have control over it: their network, pictures, films and music are on it, so it ‘feels’ as if they make the rules for the device (although somehow they know they actually don’t). The perception of safety seems to be shaped largely by the fact that the participants live in the Netherlands (4).

It was also noteworthy that participants who are internet professionals tend to use their devices more consciously and critically than those who are not: their thorough knowledge of the technology makes them more aware (3). On the other hand, they acknowledge that it is hard to be aware of privacy all the time, especially because it is something you only fully understand if you have thorough knowledge of the technology (3). Apart from that, they feel that setting the right example will help create new standards: if a service ‘feels’ safe, users will expect other services to provide similar safety (3).

There is a form of cognitive dissonance present: people justify their (unintended) submission of personal data to smart artefacts and services in similar ways. They say they have nothing to hide (5), they feel they will be able to quit any time they want, or they reason that they are not important enough to be profiled (5). There is a degree of opportunism as well: as long as the service is more beneficial to the participant than the possible drawbacks in terms of privacy infringement or loss of autonomy, they see no reason to stop using it.

Annoying advertisements that seem to be personalized to their profiles do not really seem to bother them either. All in all, the amount of trust in smart artefacts and the linked services was larger than expected. Once made aware of the risks, some participants said they would like to see more transparency about the way smart artefacts and services use their data, but they failed to see how this could be matched with user-friendly interfaces (1)(5).

I discussed my findings with Esther Keymolen, who specializes in online trust. She explains that trust is always ‘blind trust’ and that trust is a way to deal with complexity.

To validate the outcomes of the probe, I discussed the subject of trust with Esther Keymolen, Assistant Professor at the Leiden School of Law and author of the Ph.D. thesis ‘Trust on the Line’ (2016). I was interested in the fact that participants regarded their smartphone as an intimate part of their lives and did not constantly consider that a lot of companies are connected to that smartphone. I wondered what this ‘blind’ trust was based on. Moreover, even though participants had some knowledge of the risks, they seemed to accept the trade-off between that risk and the convenience created by these smart artefacts and services. This intrigued me, and I sought to learn more about it.

In “Trust on the Line”, Keymolen explores why people choose to interact with smart services and artefacts without knowing much about their technical underpinnings or the true agenda of their manufacturers. She explains that this choice is related to the principle of trust, or better: blind trust. Trust is a way to interact with things and other living beings without having to know everything about their (hidden) intentions and/or inner workings. Trust bridges a knowledge gap, Keymolen explains: “Trust is a strategy to deal with (this) complexity” (Keymolen, Trust on the Line, 2016: 61).

When it comes to smart, connected artefacts and services, not only the technology must be trusted, but the manufacturer and other relevant actors, too. This is what Keymolen refers to as system trust. System trust, she continues, is about accepting and interacting with the larger systems in our world, such as banking systems, democracy and health care. We assume that these systems are put together well and maintained in such a way that contingency and risks are all but eliminated. Trusting these systems, and living our lives without thinking too much about contingencies or risks, creates confidence: we can act as if the future is certain (Keymolen, 2016). This confidence is necessary to interact with smart artefacts such as a smartphone. As the participants in the experiment declared, they need a certain basis of trust to interact with their smartphone. They put trust in the smartphone’s maker, the providers of installed apps and their personal network. When confronted with cognitive dissonance, such as the fact that they had shared far more intimate data than they first realised, they tend to regain confidence by reassuring themselves with false assumptions (‘I’ve got nothing to hide’).

Smart artefacts and services such as smartphones are designed to be personalized to the needs and demands of the user. Therefore, these devices become part of our familiar world and we feel confident in using them.

Apart from the confidence developed through (perceived) system trust, the fact that we are able to personalize our smart artefacts and services (our phones, social media profiles, etc.) means we can create a ‘familiar world’, a world not unlike our personal network: we connect to our friends, colleagues and family through these artefacts and services and therefore trust them with the data we share. We feel confident using them because they connect us to people we trust. This is where system trust de facto becomes a form of personal trust. Users customize their artefacts and interfaces, arrange their contacts and curate pictures, music and movies through a user-friendly interface, and therefore tend to ‘forget’ the interface and the artefact. As Weiser has shown, the interface moves to the background. As humans, we are interested in the goal we seek to achieve, not in the interaction itself (Cooper, 2014).

In creating this ‘familiar world’, we make these artefacts and services part of our own familiar circle, our own intimate context. Because we do so via very user-friendly interfaces and devices, we no longer really notice these services and devices; the familiar world is what we focus on. Just as you are not aware of using a computer when writing an email or browsing the web, you are not really aware you are using WhatsApp or Facebook when communicating with friends and relatives. While we use them, the artefacts and services tend to ‘disappear’, as in Mark Weiser’s Calm Technology.

Keymolen proposes four elements for analysing trust in smart artefacts and services: Context, Curation, Codification and Construction. These elements need to be seen as an interlinked set: none can be analysed without observing the others.

To analyse and explain how trust in smart artefacts works and how it should be created, Keymolen has introduced a framework. It consists of four main elements, all equally important for understanding this trust: Context, Curation, Codification and Construction, or the four C’s. All four C’s need to be addressed to ensure that a smart artefact or service can be deemed trustworthy.

If we follow Keymolen’s reasoning, it is important to observe that if we, as interface designers, really want to improve trust in the smart artefacts and services we design, we cannot focus on the interface alone; we need to look at all the elements. Keymolen makes an important observation regarding this analysis:

“Moreover, users often are not even aware that they are visible to –and easily manipulated by– curators, putting them in a situation of invisible invisibility, reinforcing the power imbalance. This observation immediately proves the necessity to analyse trust in the context of all the C’s and not merely on the context level, which regularly happens in trust research.”

Keymolen, E. (2016) Trust on the line, Rotterdam University Press: Rotterdam, 254

Offering a possible solution to the power imbalance mentioned above, Keymolen argues that users should become more emancipated (Keymolen, 2016: 248). An emancipated user is aware of possible risks, and not only of the context, but also of the curation, codification and construction. In essence, however, this would mean that users need to learn to program and engineer, understand how the business models around smart artefacts work, and become legal experts able to analyse terms and conditions in the light of privacy regulations. This is probably too much to ask of the average person.

Keymolen does, however, offer some cues for interface design. She quotes Mireille Hildebrandt:

“(…) develop intuitive interfaces with which citizens can gain insight into the multiple manners in which they are ‘being read’ by their smart environments. This should give them the means to come to grips with the potential consequences.”

(Hildebrandt 2013a: 19-20 in Keymolen, 2016: 249)

Keymolen makes a plea for more transparency in the design of smart artefacts. This advice aligns with what some participants in the probe research stated. Moreover, if we take Keymolen’s advice to heart to create a well-informed interface design process, we cannot focus solely on the element of context. When developing and engineering smart artefacts and, in particular, their interfaces (context), we need to focus on the other three C’s as well: Curation, Codification and Construction. And if we want to help users become more emancipated, we need to provide them with the means to inform themselves on all four C’s.

But how are we to do this? How are we to make more ethical, more transparent choices when designing the interfaces of smart artefacts and services if we need to address all four C’s?

There are a lot of guidelines available for designing with a more ethical mindset. Yet there are no real guidelines to create interfaces that are more trustworthy when it comes to privacy.

Cues for an ethical approach to interface design can be found in the works of Norman and Cooper. Cooper claims that digital products should not do harm in the broadest sense: physical harm, unsafety, exploitation (Cooper, 2014: 169), and that a ‘well behaving product’ should ‘respect other user’s privacy’ (Cooper, 2014: 203). Norman focuses more on (interaction) design that responds to human error: a product should have a certain margin of error to cope with the erratic results of human input (Norman, 2013: 211-212). This ‘resilient engineering’ calls for constant assessment, testing and improvement of the design of systems, procedures, management and training in order to be able to respond as problems arise (Norman, 2013: 212). Neither, however, provides clear guidelines or principles on how to design and develop digital products and interfaces with the contextual integrity or data privacy of users and other affected individuals in mind.

The Critical Engineering Manifesto (Vasiliev D, Savičić G, Oliver J, 2011) provides a more concrete set of rules. The manifesto advocates a holistic and somewhat sceptical approach to engineering, in which the engineer should become aware of the long-term consequences of his work for the people it affects. For product and interface design, the 4th principle could be of use:

The Critical Engineer looks beyond the “awe of implementation” to determine methods of influence and their specific effects.

Furthermore, the critical engineer should serve “to expose moments of imbalance and deception” and “deconstruct and incite suspicion of rich user experiences.” In other words, he should provide clarity and transparency about the technology at hand. The manifesto calls for a more open approach to the design and implementation of this technology, but in a deconstructive way: by seeking to “reconstruct user-constraints and social action through means of digital excavation.” A good example of such reconstruction of existing technology is the Transparency Hand Grenade. However, the manifesto as such is more of an activist approach, aimed at the reconstruction and recontextualization of existing smart artefacts, than a constructive guideline for creating usable products from an ethical point of view.

Edition 2, Photo by Khuong Bismuth, 2014

When it comes to guidelines for privacy-centred design, the best known are the 7 principles of Privacy by Design by Ann Cavoukian (Privacy by Design: The 7 Foundational Principles, Cavoukian A, 2009). Originating from 1995 and developed in cooperation with the Dutch TNO institute, these principles provide a set of rules that should be taken into account when designing privacy-friendly smart artefacts. The rules are useful in that they are widely adaptable, but at the same time they are vague: they offer few clues on exactly how they are to be applied within an engineering process, as they have no clear indicators of successful implementation.

Mike Monteiro and Aral Balkan, both designers and activists, have developed similar views. Monteiro’s talk at Webstock in 2013 started an initiative aimed at educating designers to make the right ethical choices. A designer, he says, should always question the moral and ethical implications of an assignment, even if that means turning down a design job. Both Monteiro and Balkan have put out principles that resemble the Critical Engineering Manifesto and the principles of Privacy by Design. These principles are powerful in that they make designers aware of the responsibility they carry for the work they put out into the world.

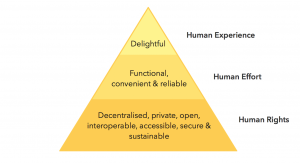

Aral Balkan has constructed his Pyramid of Ethical Design, loosely based on Maslow’s hierarchy of human needs.

Pyramid of Ethical Design – Aral Balkan

In turn, Mike Monteiro has proposed a set of ten rules, A Designer’s Code of Ethics (Monteiro M, 2017), that advocate a more ethical attitude towards design in the broadest sense.

However, for interface design, these principles do not offer a clear starting point or indicator of success either. Nor are they meant to: they are more about a designer’s attitude towards ethical design than about concrete guidelines or design methods that help designers start a design process that gives the end user a sense of trust.

So there is room to develop one.